Luckily, all of the above-mentioned packages are pip-installable! $ pip install torch Finally, we will be using matplotlib to plot our results! We are also using the imutils package for data handling. To access PyTorch’s own set of models for vision computing, you will also need to have Torchvision in your system. To follow this guide, first and foremost, you need to have PyTorch installed in your system.

#Pytorch cross entropy loss code#

Without further ado, let’s jump into the code and see distributed training in action! Configuring your development environment Finally, each instance creates its own gradients, which are then averaged and back-propagated amongst all the available instances. The data is then batched into equal parts, one for each model instance. Once nn.DataParallel is called, individual model instances are created on each of your GPUs. This is known as Data Parallel training, where you are using a single host system with multiple GPUs to boost your efficiency while dealing with huge piles of data.

#Pytorch cross entropy loss how to#

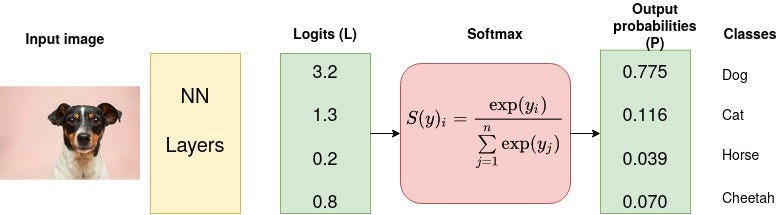

To learn how to use Data Parallel Training in PyTorch, just keep reading.įigure 1: Internal workings of PyTorch’s Data Parallel Module. A clear vision of your goal while traversing through PyTorch’s verbose code.An idea on implementing Data Parallelism.A clear understanding of PyTorch’s Data Parallelism.After completing this tutorial, the readers will have: Today, we will learn about the Data Parallel package, which enables a single machine, multi-GPU parallelism. One of PyTorch’s stellar features is its support for Distributed training. Perhaps Java also had the same intention, but I’ll never know since that ship has sailed!ĭistributed training presents you with several ways to utilize every bit of computation power you have and make your model training much more efficient. Granting you a more definite grasp over every step you take, PyTorch gives you more freedom. The reason why it is more verbose is that it lets you have more control over your actions. However, after a while, the beauty of PyTorch started to unravel itself. Having been accustomed to hiding behind TensorFlow’s abstractions, the verbose nature of PyTorch reminded me exactly why I had left Java and opted for Python. Although to be very honest, I had a rough start. As someone who used TensorFlow throughout his Deep Learning days, I wasn’t yet ready to leave the comfort zone TensorFlow had created and try out something new.Īs fate would have it, due to some unavoidable circumstances, I had to finally dive into PyTorch. When I first learned about PyTorch, I was quite indifferent to it. Introduction to Distributed Training in PyTorch (today’s lesson).PyTorch: Tran sfer Learning and Image Classification(last week’s tutorial).
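The Data Parallel flow this post describes (one model instance per GPU, the batch split into equal parts, per-instance gradients averaged) can be illustrated with a small plain-Python sketch. It is only an illustration, not how nn.DataParallel is implemented internally. The helper names `split_batch`, `local_gradient`, and `data_parallel_step` are hypothetical, and a one-parameter linear model with a mean squared error loss stands in for a real network.

```python
# Plain-Python illustration of the data-parallel idea described in this post.
# All helper names below are hypothetical; none of them are PyTorch APIs.


def split_batch(batch, num_replicas):
    """Split a list of samples into num_replicas equal, contiguous shards."""
    if len(batch) % num_replicas != 0:
        raise ValueError("this sketch assumes the batch divides evenly")
    shard_size = len(batch) // num_replicas
    return [batch[i * shard_size:(i + 1) * shard_size]
            for i in range(num_replicas)]


def local_gradient(weight, shard):
    """Gradient of the mean squared error of y_hat = weight * x on one shard."""
    return sum(2.0 * (weight * x - y) * x for x, y in shard) / len(shard)


def data_parallel_step(weight, batch, num_replicas, lr=0.1):
    """Shard the batch, average the per-replica gradients, update the weight."""
    shards = split_batch(batch, num_replicas)
    # Each "model instance" computes a gradient on its own shard only.
    grads = [local_gradient(weight, shard) for shard in shards]
    avg_grad = sum(grads) / num_replicas
    return weight - lr * avg_grad, avg_grad


# Four samples drawn from y = 2x, shared between two simulated replicas.
batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
new_weight, avg_grad = data_parallel_step(weight=0.0, batch=batch, num_replicas=2)
full_grad = local_gradient(0.0, batch)  # gradient on the undivided batch
print(avg_grad, full_grad, new_weight)
```

With equal-sized shards, the averaged gradient matches the gradient computed on the whole batch, which is why splitting the work across instances does not change the update. In PyTorch itself, the wrapper named in this post is applied with `model = nn.DataParallel(model)`.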
